Oracle Integration Cloud (OIC) provides many out-of-the-box functions, but there will be cases where none of them meets our requirements. In such cases, we can create custom functions and make them available in the mapper or integration layer.

The only constraint in OIC is that custom functions must be written in JavaScript (not Java, unlike other products such as SOA and OSB) and exported as a JavaScript library.

Registering a Library:

Let's take a simple example of generating random numbers in the provided range and using that in our integration.

Here's the JavaScript code -

    function getRandomNum(min, max) {
        var rnd = Math.floor(Math.random() * (max - min)) + min;
        return rnd;
    }

This function takes two input parameters, min and max. For integer inputs, each call returns a random integer that is greater than or equal to min and less than max.
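To see the range in practice, the function can be exercised locally (for example in Node.js) before it is uploaded. The sketch below repeats the function and calls it many times; the bounds 5 and 10 and the loop count are arbitrary values chosen for illustration, not part of the original example.

```javascript
function getRandomNum(min, max) {
  var rnd = Math.floor(Math.random() * (max - min)) + min;
  return rnd;
}

// Collect the distinct values produced over many calls.
var seen = {};
for (var i = 0; i < 10000; i++) {
  seen[getRandomNum(5, 10)] = true;
}

// Print the distinct values in ascending order.
var values = Object.keys(seen).map(Number).sort(function (a, b) {
  return a - b;
});
console.log(values);
```

Because Math.random() returns a value that is always less than 1, the printed list never contains 10. If the upper bound should be included, (max - min + 1) could be used as the multiplier instead.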

A few points to note here -

- Logic should always be wrapped in a function, if you want it to be used in OIC.

- You always have to assign the return value to a variable and return it.

The following code will not work as intended -

    return Math.floor(Math.random() * (max - min)) + min;

Save the code in the first snippet as a .js file (RandomNumGenerator.js) on your local machine. Now, we will register the .js file as a library in OIC.

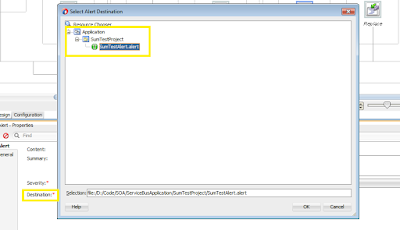

1. Log in to the OIC console, go to Integrations -> Libraries, and click the Register button in the top right corner.

2. In the Register Library pop-up, choose the .js file that you saved above. Give the custom library a proper name and description, and click Create.

3. The library is now registered. You can see the function you created in the left functions pane, along with the input and output parameters that you need to define.

4. Classification Type defines whether the library will be used in orchestration or XPath. I chose orchestration.

5. Define the types of the input and output parameters; each must be Number, String or Boolean.

Now the final library looks as follows. We are done with registering the library.
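The parameter types chosen in step 5 matter because of how JavaScript's + operator behaves: if min ever reached the function as the string "5" rather than the number 5, the final "+ min" would concatenate instead of add. The sketch below is a hypothetical defensive variant (the name getRandomNumSafe is illustrative and not part of the original library) that converts both inputs explicitly. It makes no claim about how OIC actually passes the values; it only guards against the string case.

```javascript
// Hypothetical defensive variant; getRandomNumSafe is an illustrative name.
function getRandomNumSafe(min, max) {
  // Convert explicitly in case the values arrive as strings such as "5".
  var lo = Number(min);
  var hi = Number(max);
  // Same calculation as getRandomNum, assigned to a variable before returning.
  var rnd = Math.floor(Math.random() * (hi - lo)) + lo;
  return rnd;
}

// Works the same whether the inputs are numbers or numeric strings.
console.log(typeof getRandomNumSafe(5, 10));
console.log(typeof getRandomNumSafe("5", "10"));
```

Both calls log "number". Without the conversion, the second call would return a string, because a number plus a string yields a concatenated string.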

Invoking the library in your integration:

In this example, let's create a simple app-driven orchestration integration. It will be exposed as a synchronous REST service that takes two query parameters as input and returns a JSON response containing the random number.

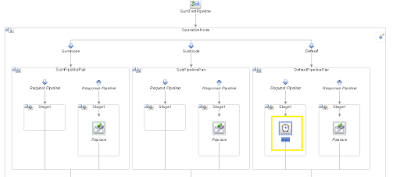

1. I have created the integration with name PG_CUSTOMFUNCTION.

2. The REST trigger (named GetRandomNumber) details look as follows -

- Resource: /getRandomNum

- Method: GET

- Query Parameters: minVal, maxVal

- Response Media Type: application/json

- Response: { "randomVal" : "" }

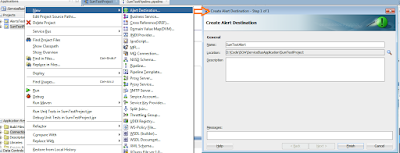

4. A JavaScript editor screen opens. Click on + function to choose the JavaScript library that you registered.

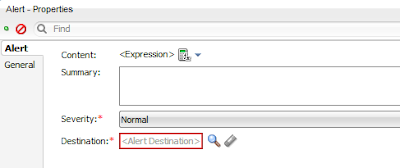

5. Now map the input values of the JavaScript function by clicking the edit icon next to Value.

6. Map the Query param minVal to the js function's input min.

7. Similarly map the Query param maxVal to the js function's input max. Save and close the action window.

8. Now map the output of the js function to final output randomVal as follows.

9. Final integration looks as follows -

Activate the integration and test it. In the REST URL below, I submitted minVal as 5 and maxVal as 99999.

https://test.oracletest.com/ic/api/integration/v1/flows/rest/PG_CUSTOMFUNCTION/1.0/getRandomNum?minVal=5&maxVal=99999

I received the response in JSON as follows.

{

"randomVal" : "53152"

}

You will typically get a different random value each time you test the service.
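The service can also be called from a script instead of a browser or REST client. The following is a minimal sketch, assuming Node.js 18 or later (for the built-in fetch) and HTTP Basic authentication; whether Basic authentication is accepted depends on how your instance is configured. The helper names buildRandomNumUrl and fetchRandomNum and the user and password arguments are hypothetical, and the base URL is the sample one from above.

```javascript
// Sample endpoint from the example above; replace with your own instance URL.
var BASE_URL =
  "https://test.oracletest.com/ic/api/integration/v1/flows/rest/PG_CUSTOMFUNCTION/1.0/getRandomNum";

// Hypothetical helper: builds the request URL with the two query parameters.
function buildRandomNumUrl(minVal, maxVal) {
  var params = new URLSearchParams({
    minVal: String(minVal),
    maxVal: String(maxVal)
  });
  return BASE_URL + "?" + params.toString();
}

// Hypothetical helper: calls the service and returns randomVal as a number.
// Assumes Basic authentication, which may not match your instance's setup.
async function fetchRandomNum(minVal, maxVal, user, password) {
  var auth = Buffer.from(user + ":" + password).toString("base64");
  var response = await fetch(buildRandomNumUrl(minVal, maxVal), {
    headers: { Authorization: "Basic " + auth, Accept: "application/json" }
  });
  if (!response.ok) {
    throw new Error("Request failed with status " + response.status);
  }
  var body = await response.json();
  // randomVal is a string in the sample response, so convert it.
  return Number(body.randomVal);
}

console.log(buildRandomNumUrl(5, 99999));
```

The console.log call only prints the URL that would be requested; fetchRandomNum is defined but not invoked here, since it needs a reachable instance and valid credentials.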